In an industrial engineering application, care must be taken to apply the right amount of risk assessment theory and practice. To over-spend on risk assessment (to the point where the assessment consumes so many resources that the entire project is unlikely to make any money) is foolhardy and likely to leave many investors a lot poorer than they were before1. To under-spend on risk assessment is to invite disaster, perhaps even on a catastrophic scale. Past large-scale industrial accidents share two primary contributing factors, at least one of which appears in every case:

The hazards that led to the accident were unknown to the designers. Or...

Although the hazards that led to the accident were known and were controlled, the controls were not properly maintained.

Examples of both cases follow.

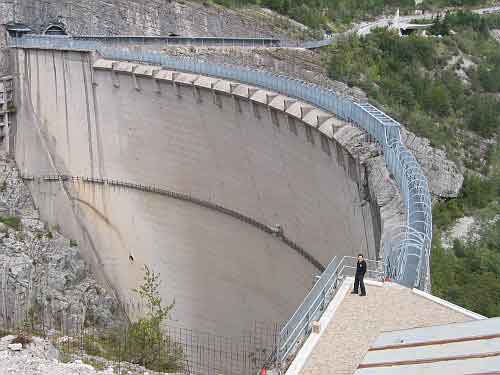

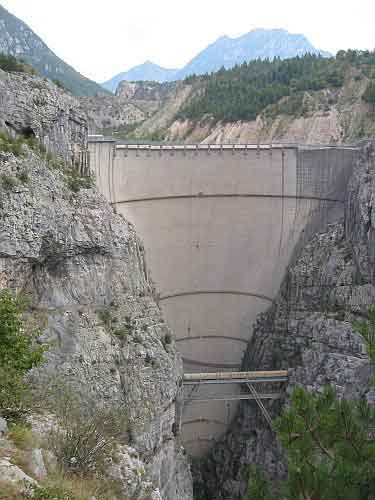

Vaiont Dam Disaster, Italy, 1963

Unfortunately, and sometimes tragically, there is a fundamental risk inherent in mankind's incomplete understanding of the universe: the possibility that not all hazards have been correctly identified, or their risks correctly assessed, because the science behind the methods is incomplete or just plain wrong. This is uncomfortable and very difficult for a scientist or engineer involved in risk assessment to account for, but it is an unavoidable part of life in a universe that we do not fully understand. The Vaiont Dam disaster is a clear example of engineers and geologists failing to understand the nature of the problems they faced.

At the time of its completion in 1960, the Vaiont Dam was the world's highest thin arch dam2, rising 265 meters above the floor of the valley below. Intended to supply hydroelectric power to the rapidly growing cities of northern Italy, the dam was built in the south-eastern part of the Italian Alps, about 100km north of Venice. At about 10:40pm local time on 9 October, 1963, about 2500 people were killed when a massive wall of water literally swept their towns away. When rescue personnel and shocked survivors looked up at the dam, they expected to find it gone. They were surprised to find the dam still standing with a minimal amount of damage - but not much water was left in the reservoir. So what had happened?

On the right side of the dam, a huge mass of earth broke loose in a landslide that moved about 500m northwards at speeds of up to 30 m/s (about 67 miles per hour). The mass slid into the reservoir and up the opposite bank, displacing a large amount of water. The entire landslide lasted less than a minute, during which a massive wall of water swept up the opposite bank, destroyed the village of Casso, then overtopped the dam itself and raged through the canyon and onto the villages below. The water, estimated at nearly 30 million cubic meters (or about 26% of the capacity of the reservoir), effectively wiped out the villages of Longarone, Pirago, Villanova and Rivalta.
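The figures quoted above can be cross-checked with a few lines of arithmetic. The following is only a sketch using the numbers given in the text; the variable names are illustrative, and the "implied" reservoir volume is simply what the 26% figure works out to, not a value taken from any survey.

```python
# Figures quoted in the text above (all approximate).
PEAK_SPEED_M_PER_S = 30.0        # peak landslide speed
SLIDE_DISTANCE_M = 500.0         # distance the slide travelled
SLIDE_DURATION_MAX_S = 60.0      # "less than a minute"
WAVE_VOLUME_M3 = 30e6            # water that went over the dam
WAVE_SHARE_OF_RESERVOIR = 0.26   # the "about 26%" quoted in the text

METRES_PER_MILE = 1609.344
SECONDS_PER_HOUR = 3600.0


def metres_per_second_to_mph(speed_m_per_s):
    """Convert a speed in metres per second to miles per hour."""
    return speed_m_per_s * SECONDS_PER_HOUR / METRES_PER_MILE


peak_speed_mph = metres_per_second_to_mph(PEAK_SPEED_M_PER_S)

# Reservoir volume implied by "30 million cubic meters is about 26%".
implied_reservoir_m3 = WAVE_VOLUME_M3 / WAVE_SHARE_OF_RESERVOIR

# Covering 500m in under a minute means the average speed was at least this.
min_average_speed_m_per_s = SLIDE_DISTANCE_M / SLIDE_DURATION_MAX_S

print(f"Peak speed: {peak_speed_mph:.0f} mph")
print(f"Implied reservoir volume: {implied_reservoir_m3 / 1e6:.0f} million cubic meters")
print(f"Minimum average slide speed: {min_average_speed_m_per_s:.1f} m/s")
```

The conversion confirms the 67 miles per hour quoted, and the 500m travelled in under a minute is consistent with a peak speed well above the average.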

As with many major accidents, there were several contributors to the eventual catastrophe. It has been established that one of the key factors was that the seismic and geological analyses of the time did not reveal a thin layer of clay within the limestone. This provided a surface for the land to slide on, but still: what could have started all that land moving at once, particularly if it had remained stable for thousands of years? The answer lay within the rock and clay itself. Under normal conditions, ground water used to seep out of the rock into the clay, but filling the reservoir prevented this from occurring. During the first few years of the dam's existence, it was noted that the land would 'creep' along at a slow rate. Lowering the water level in the reservoir seemed to control this. Over the next few years, the reservoir was repeatedly filled and lowered as the dam's engineers tried to find a water level at which electricity could be generated and the land would not slide. However, this practice may actually have contributed to the enormous build-up of hydraulic pressure in the clay layer. Eventually, the pressure reduced the effective shear strength of the clay layer enough for the massive landslide to occur.
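The loss of strength described above is usually expressed with the Mohr-Coulomb failure criterion combined with Terzaghi's effective stress principle. This entry's sources give no formula, so the relation and all symbols below are a standard textbook sketch and not the analysis actually carried out at Vaiont.

```latex
\tau_f = c' + (\sigma_n - u)\,\tan\varphi'
```

Here $\tau_f$ is the shear strength available along the sliding surface, $c'$ is the effective cohesion of the clay, $\sigma_n$ is the total normal stress pressing the rock mass onto the surface, $u$ is the pore water pressure, and $\varphi'$ is the effective friction angle. As $u$ rises, the term $(\sigma_n - u)$ shrinks and $\tau_f$ falls with it; sliding begins once $\tau_f$ drops below the shear stress driving the slope downhill.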

Massive Toxic Chemical Release, Bhopal, India, 1984

Around midnight on 3 December, 1984, a chemical plant making pesticides in Bhopal, India accidentally released approximately 40 tonnes of methyl isocyanate (MIC) into the atmosphere. Methyl isocyanate is very toxic to human beings, and a massive and tragic loss of life resulted (it is presently estimated that about 2000 people died and as many as a quarter of a million were injured). There was also massive damage to livestock and crops in the surrounding area. Since the exact cause of the accident remains in contention, this entry will not attempt to explain in detail how the accident occurred3, other than to note that the most popularized idea about how it occurred was physically impossible4.

What is undisputed is that at some point a large amount of water entered a large MIC storage tank, causing a chemical reaction that released MIC and other reaction byproducts, which killed many helpless people in the area as they slept. More controversial are the major contributing factors to the accident, specifically the inoperable status of the safety systems designed to mitigate (though not prevent) just such an accident. In particular, the scrubber and the flare tower, devices intended respectively to chemically absorb or to burn off toxic chemicals accidentally released from the MIC tanks into the vent headers, failed to protect the public from the large cloud of MIC that suddenly came out of the storage tank. It has been argued, and still is, that the scrubber and flare tower did not have enough capacity to deal with such a large-scale release in the first place.

Beyond the legal entanglements, the accident at Bhopal demonstrates several of the difficulties in performing accident analysis, most notably the problems that arise when politics and the news media get involved. The sheer scale of the tragedy led the Indian Central Bureau of Investigation (CBI) to launch its own criminal investigation at the same time as the investigation by the Union Carbide Corporation (UCC). The CBI, perhaps reacting to pressure from its own government, citizens and media, denied UCC investigators access to critical records, which kept UCC from determining the ultimate cause of the event until 1986. The media's sensationalizing of a simple accident initiator that turned out to be impossible (the notorious 'missing slip-blind during water washing procedures') compounded the issue tremendously. Furthermore, the operators and workers surrounding the event apparently generated their own cover-up of the accident progression in an attempt to absolve themselves and their co-workers of guilt for such a tragedy, a perfectly normal, albeit unhelpful, human reaction. In the end, it must be noted that such large-scale industrial catastrophes are never without massive complications stemming from the nature of human beings themselves, and that the best way to treat accident analyses is in the theoretical future tense rather than the practical past tense. In other words, it is far preferable to ask 'what if' than 'what happened'.

As tragic as the event was, some good has come of that night and of the many arguments, legal proceedings and analyses that have followed. They have led to great improvements in the involvement of the community with the companies that own, maintain and operate (M/O) major chemical plants, particularly in the USA. Although the Union Carbide Corporation (UCC) has been accused of failing to properly supervise Union Carbide India Limited (UCIL), the company that managed and operated the plant in Bhopal, and many issues are still in legal contention, the improvements made almost as a direct result of the Bhopal accident (and ones like it) may have prevented hundreds more accidents from ever happening.

20-20 Hindsight

After any disastrous industrial accident, the immediate question everyone wants answered is 'why?' Although many risk analysts are employed in the specialized science of accident investigation, it is rarely a glorious or enjoyable enterprise, given the myriad challenges posed by the psychology and politics inevitably entwined with any major disaster. A well-known phrase for the results of accident analyses is '20-20 hindsight', a sometimes deeply cynical and pointed phrase about how much better people can see what might happen once it has already happened. However, as another phrase goes, 'luck can keep you from making mistakes, but only wisdom can keep you from making them again'. Although it is little comfort to those who suffer from an industrial accident, it is through the lessons of such disasters that we are able to learn, become wiser, and prevent further disasters from occurring.

In the case of the Vaiont Dam disaster, discovering hazards after the accident has occurred represents 20-20 hindsight at its most cruel. Bona fide efforts were made by dozens of very bright people to understand and analyze the problems and effects of building the dam, but a tragedy still occurred. Saying 'expect the unexpected' is not only annoyingly glib; it is a logical contradiction and impossible to accomplish in practical risk analysis. In real risk assessment and management, the most common approach to the problem of the unknown is to 'over-engineer', or to apply conservative assumptions, so that any errors in calculation fall on the side of extra capacity or margin of safety in the controls. Indeed, in certain circles it is necessary to maintain the same margin of safety even when new hazardous conditions are discovered. In many cases, the expense of maintaining such a large margin of safety has turned potential disasters into 'near misses' that do not get the attention of much more than a few dozen people.
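The idea of a margin of safety can be illustrated with a small sketch. Everything in it is hypothetical: the entry gives no numbers, so the required factor, the loads, and the function names below are assumptions chosen only to show how a conservative margin absorbs an undiscovered hazard and what it takes to restore that margin afterwards.

```python
def safety_factor(capacity, demand):
    """Ratio of what a control can withstand to what is asked of it."""
    if demand <= 0:
        raise ValueError("demand must be positive")
    return capacity / demand


def capacity_needed(demand, required_factor):
    """Capacity a control must have to meet the required safety factor."""
    return demand * required_factor


# Hypothetical values, in arbitrary load units.
REQUIRED_FACTOR = 2.0     # assumed conservative design margin
known_demand = 100.0      # load from the hazards identified at design time
design_capacity = capacity_needed(known_demand, REQUIRED_FACTOR)

# A hazard nobody identified at design time adds to the load.
newly_found_demand = 30.0
total_demand = known_demand + newly_found_demand

# The margin is eroded, but the control still holds (factor above 1):
# this is the 'near miss' rather than the disaster.
eroded_factor = safety_factor(design_capacity, total_demand)

# Keeping the same margin once the hazard is known needs more capacity.
restored_capacity = capacity_needed(total_demand, REQUIRED_FACTOR)

print(f"Design capacity: {design_capacity:.0f}")
print(f"Factor after new hazard: {eroded_factor:.2f}")
print(f"Capacity needed to restore the margin: {restored_capacity:.0f}")
```

With these assumed numbers, the control designed for a factor of 2 still has a factor of about 1.5 after the unforeseen load appears, and capacity would have to rise from 200 to 260 to bring the margin back to its original level.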

In the case of the Bhopal, India disaster, it was demonstrated that knowing about hazards and designing and implementing controls for them isn't always enough. The controls need to be maintained with the proper rigour if they are to remain effective. Additionally, those who are accepting the risk need to be made aware of exactly what they are at risk from and how it is being controlled. In 1986, the Emergency Planning and Community Right-to-Know Act (EPCRA) was passed in the USA. It represents one good result of the Bhopal disaster, as well as of the 1985 release of methylene chloride and aldicarb oxime from Union Carbide's Bhopal sister plant in Institute, West Virginia, USA. Further rules and regulations have vastly improved the maintenance and documentation of the safety 'envelope' of chemical and nuclear processing facilities in the USA5.

Some Problems With People and Risk

Beyond whatever industry or government practices may be to blame for any specific accident, there lie two fundamental character flaws common to many human beings.

First, there is the tendency to become complacent about risk controls themselves, or to have a false sense of security based upon a perceived (sometimes subliminal) sense of invincibility. Sometimes, over-engineering safety controls can contribute to this problem, particularly in the most hazardous of operations. In such cases, human-interactive safety controls (ie, 'administrative' or procedural rules and such) are supplemented with mechanical or engineered safety barriers so that even the most grossly incompetent worker cannot cause an accident. Where the potential consequences are less severe (such as in a chemistry or biology laboratory compared to a bomb assembly plant), complacency is a larger problem because there are simply no engineered controls in place to prevent small-scale accidents. Other examples include safety at construction sites, driving a car, and even working in one's own home. Several approaches can be taken to the problem of complacency around hazards that are ubiquitous in everyday life, whether at work or at leisure. The one thread common to them all is making the people near the hazards recognize them every time they see them. It sounds a lot simpler than it really is.

Secondly, it is a well-known fact that human beings have a tendency to want to gamble. It was stated in the first part of this entry that some people hold that one form of enjoyment of life is defined by the risks that are taken, the 'competitive spirit', so to speak. Taking chances, or 'running risks' as the poignant saying goes, in order to strive for and achieve greater success is frequently considered a benefit to the advancement of any society, particularly when that society relies upon the continued development of new and ever more fantastic technology. However, such an attitude applied to risk-based control selection can lead to a grave abuse of the risk assessment process. Simply put, it isn't the decision to run the risk that the risk assessment process decries; it is the act of making that decision without including those who may be directly affected by it. Thankfully, such practices are illegal in most industrialized nations of the world, and very stiff penalties and fines can be (and have been) levied against those who have abused the risk assessment rules and laws, regardless of whether an actual accident has occurred. Risky business is indeed what the actions of an unscrupulous or unregulated industry may be called, the romantic qualities of which are of little comfort when things go horribly wrong.

1 A very important exception to this is when it is a government-sponsored engineering application, in which the goal is not to make money but to provide some other function, such as making highways, making energy, and making weapons to protect its citizens.

2 An arch dam is a dam that is curved in plan so that it bulges towards the reservoir; that is, it is convex on the upstream side and appears to curve inwards when seen from downstream. The term 'arch' is used because the chief engineering principle that allows the structure to hold back such a massive force of water is the principle of the arch, which transfers the load into the valley walls. Many modern dams of the 'Whoa, that's huge!' size are arch dams, and they are almost always very tall.

3 In the interests of impartiality regarding such a sensitive issue, this entry has deliberately left out the precise details of the accident progression, whether they were determined by the UCC or the CBI with the Indian government, since they tend to contradict each other.

4 The popularized concept at the time was that, during a standard water-washing procedure some distance downstream, a now-notorious 'slip-blind' was missing, ultimately allowing water to enter the MIC tank and cause the release. It has been demonstrated and documented in the official Bhopal accident case study, using verified plant design specifications and engineering calculations, that such an event was, in fact, physically impossible.

5 In 1990, while developing the Clean Air Act Amendments (CAAA), the US Senate concluded (based on an EPA analysis) that accident prevention had not been given sufficient attention in the existing Federal Government programmes. As a result, the CAAA tasked the Environmental Protection Agency (EPA) and the Occupational Safety and Health Administration (OSHA) with developing programmes to prevent chemical incidents. With Congressional authorization, the EPA was made responsible for implementing the Risk Management Rule (40 CFR 68, in the numbering system of the US Code of Federal Regulations) to protect the public, and OSHA for implementing the Process Safety Management Standard (29 CFR 1910.119) to protect workers. Most of this Researcher's professional work since 1995 has been directly involved with these rules and the newer (2000) Nuclear Safety Management rule (10 CFR 830) for the Department of Energy's nuclear research laboratories and production facilities.